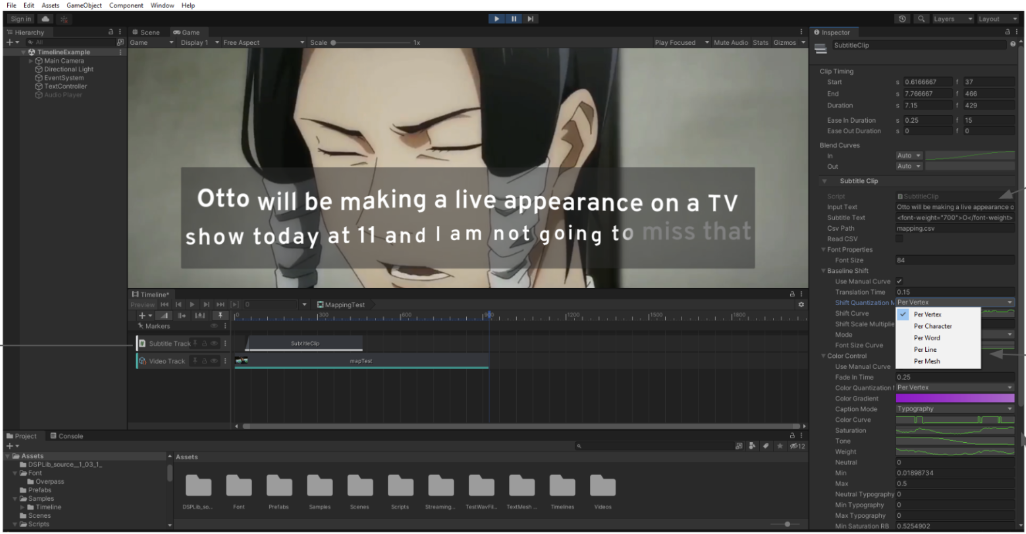

We study and design human–AI systems that address accessibility at multiple levels: supporting communication with and among people with disabilities (e.g., sign language technologies and captioning systems); enabling access to digital information in educational and workplace contexts (e.g., reading support tools and accessible visualizations); and advancing accessibility compliance in the public sector (e.g., dashboards for tracking accessibility-barrier data). My work centers the experiences of people with disabilities and the needs of the communities connected to them (e.g., hearing sign-language users and accessibility auditors).

Short Bio. Saad Hassan is an Assistant Professor in the School of Science and Engineering at Tulane University. He directs the NOLA A11y lab and is affiliated with the Tulane Center for Community Engaged AI. His research spans accessible computing, human-computer interaction (HCI), and computational social science, with a focus on AI-driven technologies that support communication, learning, and creative expression for people with disabilities. Saad has published over 25 research articles in premier HCI and accessibility venues, including CHI, ASSETS, TACCESS, and MobileHCI, as well as leading AI venues such as CVPR, NeurIPS, and EMNLP Findings. He has also received four Honorable Mention and Best Paper nominations at CHI and ASSETS. He earned his Ph.D. in Computing and Information Sciences from the Rochester Institute of Technology, where he was advised by Matt Huenerfauth, and was a recipient of the Duolingo Dissertation Award. He currently serves on the program committees for ASSETS and CHI.

News

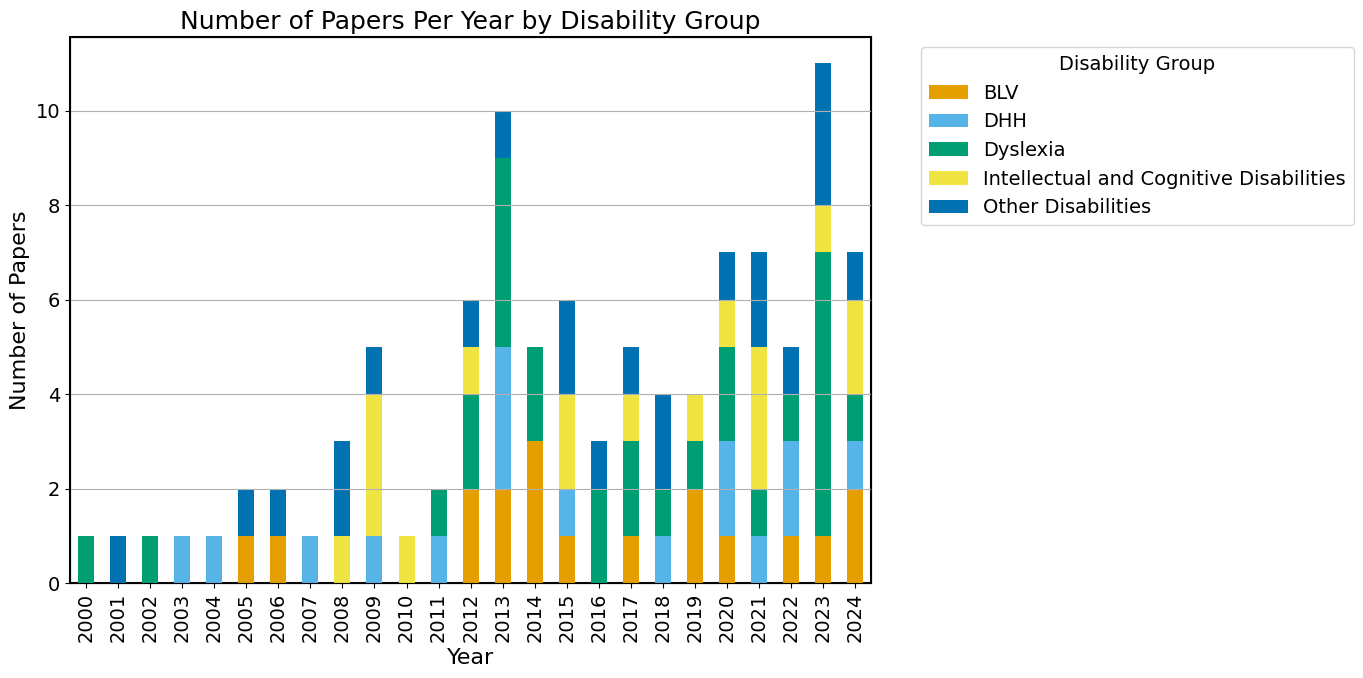

🏅 Our ASSETS 2025 literature review on reading support tools received an Honorable Mention (best paper nomination)! My students and I will be attending ASSETS 2025 in person in Denver!

📃 Two submissions to the Posters and Demonstration Track of ASSETS 2025 were accepted:

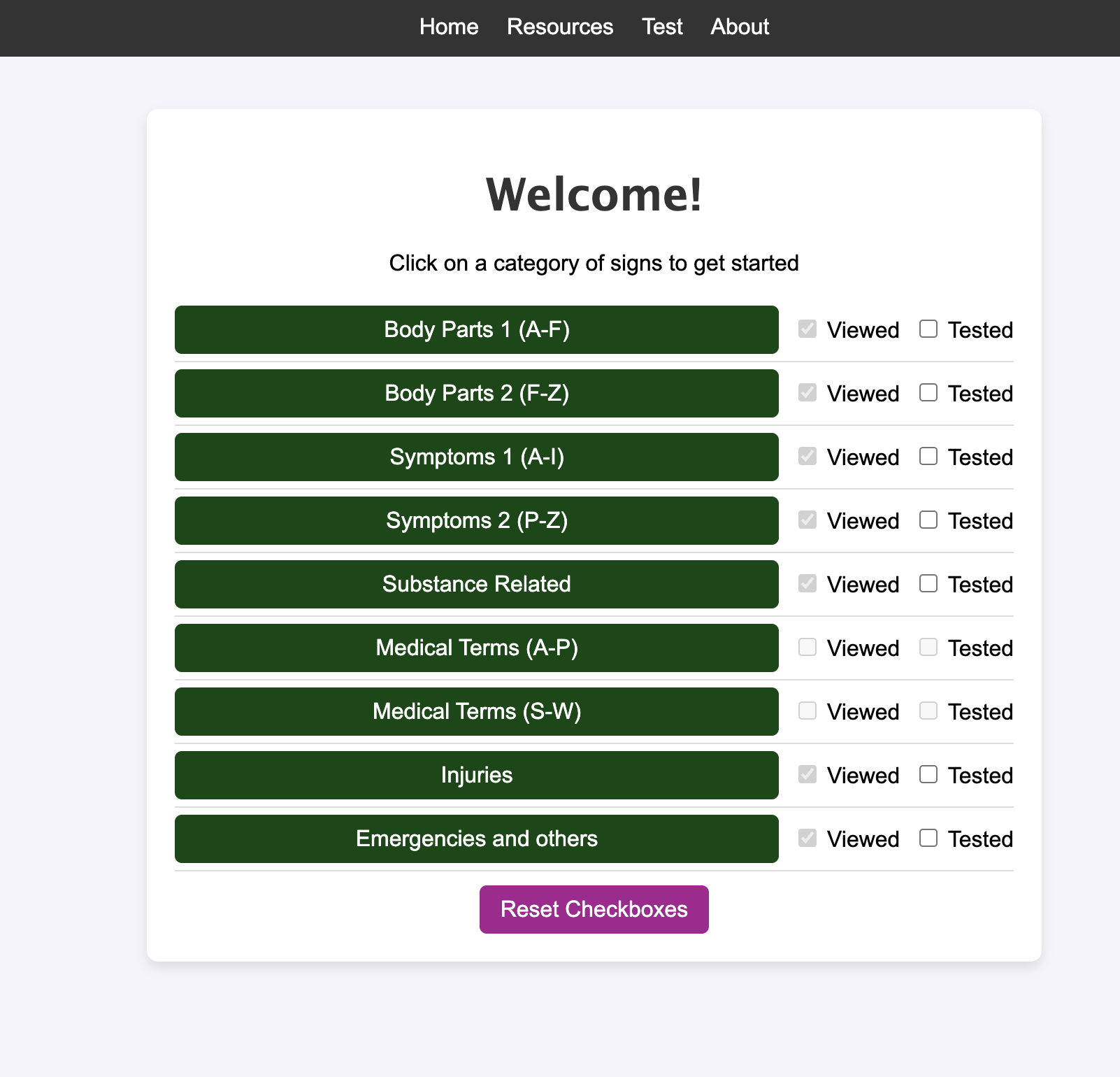

1. Sign language learning tool for emergency medical responders. Led by Chaelin Kim with undergraduates Cameron McLaren, Madhangi Krishnan, and Nikhil Modayur.

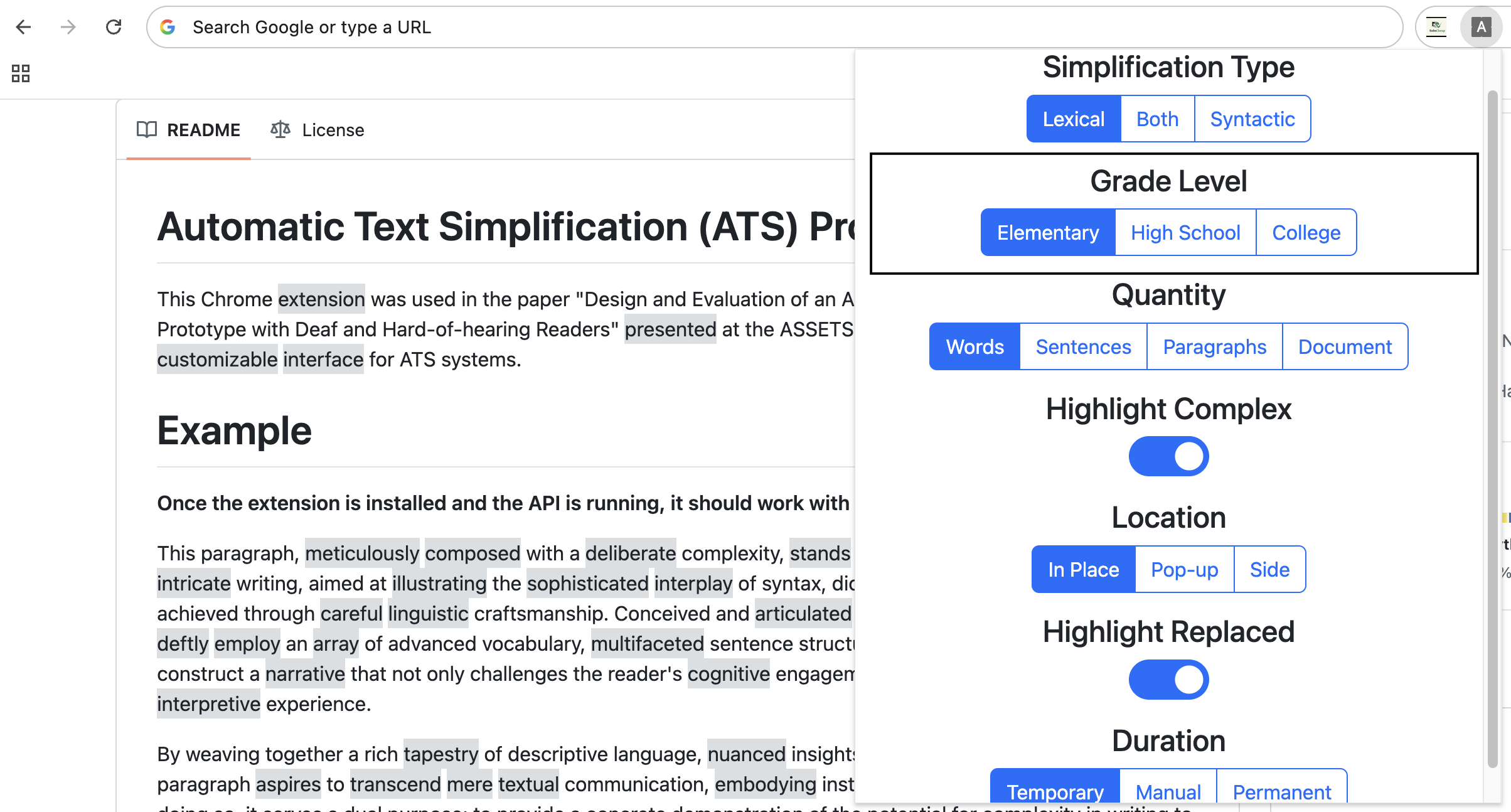

2. Automatic reading support tool for people with disabilities. Led by Nazmun Nahar Khanom with undergraduate Aaron Gershkovich and Oliver Alonzo (DePaul University).

🎉 Our workshop proposal on participant recruitment in accessibility research was accepted! The workshop will be held virtually at ASSETS 2025 during the week of October 20–26, 2025. Co-organizers: Lloyd May, Khang Dang, Sooyeon Lee, and Oliver Alonzo.

📃 Our literature review on reading support tools was accepted to the Technical Paper Track of ASSETS 2025. This work was done in collaboration with Oliver Alonzo (DePaul University).

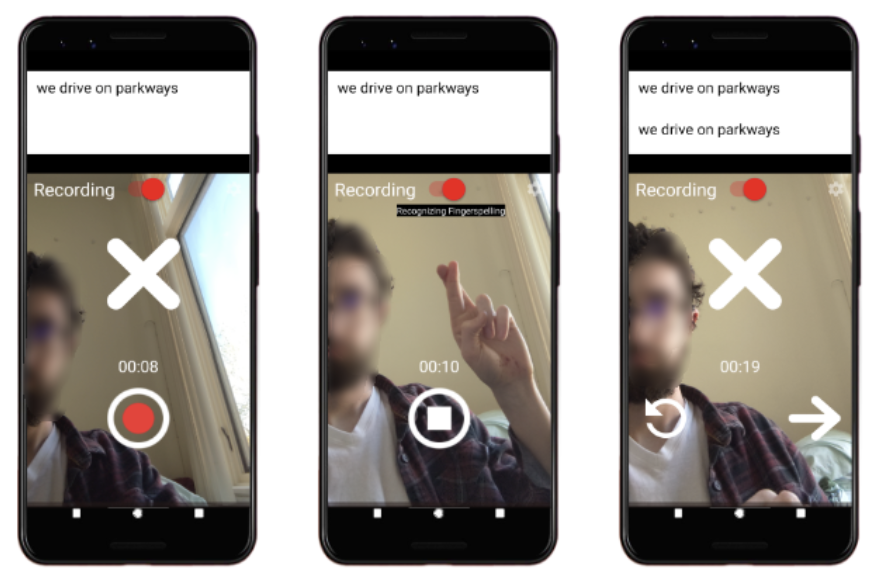

📃 A paper presenting ASL fingerspelling data collected on mobile phones, along with a recognition baseline, was accepted at CVPR 2025. This work was done in collaboration with the Sign Language Understanding Group at Google Research and the Deaf Professional Arts Network.

📃 A paper on a tactile haptic approach to affective captioning was accepted at CHI 2025. This work was led by Calua Pataca at the RIT CAIR Lab.

Publications

Teaching

Funding

Research in my lab is currently supported by:

- Louisiana Board of Regents
- Tulane: Lavin-Bernick Grant; University Senate Committee on Research (with the Provost's Office)

Previous sponsors:

- Tulane: Center for Engaged Learning & Teaching (CELT)
- Duolingo